Loading data - hands-on

This manual explains how to load data in Fabric using various notebooks for different loading methods. The following default loading notebooks are available:

- DAG \ DAG_Gold

- DAG \ DAG_Multiloader

- Loaders \ Load_bronze

- Loaders \ Load_silver

- Loaders \ Copy_Data_Lakehouse

There are also some temporary notebooks:

- Loaders \ Load_history

- Loaders \ Object_maintenance

Each notebook includes a description in its Markdown cells. This manual focuses on the workflow and how these notebooks can be used in daily operations.

Known limitations

Keep in mind that the default options in Fabric are relatively new and sometimes limited. For example, not all options normally available for a DAG (Directed Acyclic Graph) are supported yet. As a result, we sometimes use a simpler approach, even though a full DAG could handle more advanced tasks. The DAG configurations are run with RunMultiple (notebookutils.notebook.runMultiple).

When you open a notebook and change its contents, the changes are saved to the workspace automatically. Until a new deployment overwrites them, those changes also affect scheduled loads. It is therefore better to call the notebook from a personal notebook with a run statement. Examples are provided at the end of this manual.

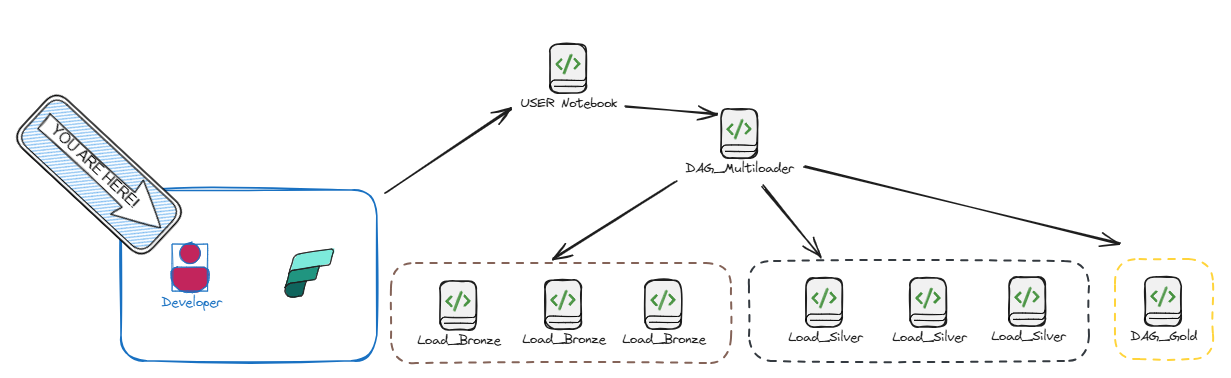

DAG Multiloader

This is the most important notebook. It can load the complete Fabric environment for a workspace (e.g., DEV, TST, PRD). In PRD, this notebook should be scheduled so that the workspace is loaded with fresh data.

The Multiloader notebook has parameters to configure which layers (Bronze, Silver, Gold) to load. You can also use the folder_path parameter to filter objects. This filter only applies to the Bronze and Silver layers, because the Gold layer is not driven by the object files.

How it works

All metadata is read from the Files section of the Meta lakehouse (Meta). Only the files in the folder given by the folder_path parameter, including its subfolders, are considered. This makes it easy to filter on one source system by providing its folder path. The default is Files/Objects, the top-level object folder, which selects all objects.
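The folder filter can be illustrated with a minimal sketch. It assumes the Meta lakehouse is reachable through a local mount (for example `/lakehouse/default` when Meta is the default lakehouse) and that object files end in `.yaml`; `find_object_files` is a hypothetical helper, not the `load_meta_data.get_objects_by_folder` function the Multiloader actually uses.

```python
from pathlib import Path


def find_object_files(lakehouse_root: str, folder_path: str) -> list:
    """Return the object YAML files selected by folder_path (hypothetical helper).

    lakehouse_root is assumed to be a local mount of the Meta lakehouse;
    folder_path is relative to it, e.g. "Files/Objects" or a single file.
    """
    target = Path(lakehouse_root) / folder_path
    if target.is_file():
        return [target]  # a single object file selects exactly one object
    # A folder selects every object file in it and in its subfolders.
    return sorted(p for p in target.rglob("*.yaml") if p.is_file())
```

Passing `"Files/Objects/MyObject"` would then select only the objects of that source system, while the default `"Files/Objects"` selects everything.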

The load_meta_data.get_objects_by_folder function reads the object files, and for each object, it also reads the connection information. This is important because some settings can be configured at the connection level as well.

Concurrency and load order

An important setting for loading data is concurrency. Some sources allow only one connection at a time. You can set concurrency at the connection level or the object level. You can also set a load order per layer. The default load order is 0. To load something before the default, set the load order to a lower value, for example -10. You can leave gaps between values for later use. The Multiloader identifies all unique load orders from the metadata. The load order can be set for Bronze and Silver at both the object and connection levels. The object-level setting takes precedence over the connection-level setting.
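The precedence rules above can be sketched as follows. The dictionary layout (a `load_order` mapping per layer, and the connection settings nested under `connection`) is a hypothetical simplification of the object and connection YAML files; the real metadata keys may differ.

```python
from collections import defaultdict

DEFAULT_LOAD_ORDER = 0


def effective_load_order(obj: dict, connection: dict, layer: str) -> int:
    """Object level wins, then connection level, then the default of 0."""
    # Hypothetical layout: {"load_order": {"Bronze": -10, "Silver": 0}}
    for source in (obj, connection):
        value = source.get("load_order", {}).get(layer)
        if value is not None:
            return value
    return DEFAULT_LOAD_ORDER


def group_by_load_order(objects: list, layer: str) -> dict:
    """Map each unique load order to its object names, lowest first."""
    groups = defaultdict(list)
    for obj in objects:
        order = effective_load_order(obj, obj.get("connection", {}), layer)
        groups[order].append(obj["name"])
    return dict(sorted(groups.items()))
```

An object that sets its own Bronze load order of 5 on a connection with -10 ends up in group 5, while objects without any setting fall into group 0.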

Bronze

For each load order, a DAG is created and run. It starts with the lowest load order and continues until all load orders for Bronze are complete.
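The per-load-order loop can be sketched like this. `build_dag` and `run_dag` are hypothetical callbacks injected so the loop can be tried outside Fabric; inside Fabric, `run_dag` could wrap `notebookutils.notebook.runMultiple`. Treating a failed run as an exception that stops the remaining load orders is an assumption of this sketch.

```python
from typing import Callable, Dict, List


def run_layer(groups: Dict[int, List[str]],
              build_dag: Callable[[List[str]], dict],
              run_dag: Callable[[dict], None]) -> List[int]:
    """Build and run one DAG per load order, lowest load order first."""
    completed = []
    for order in sorted(groups):
        # If run_dag raises, the exception propagates and later load orders are not run.
        run_dag(build_dag(groups[order]))
        completed.append(order)
    return completed
```

Because the groups are sorted before running, a load order of -10 always runs before the default of 0, regardless of the order in which the metadata was read.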

DAG Tasks

The DAG that is created includes a list of tasks (depending on the filtered objects). The tasks for Bronze call the Load_bronze notebook, supplying the object YAML file as a parameter. Optionally, you can supply the skip_notebookprebronze parameter. This can be convenient during testing to avoid unnecessary steps, such as fetching the latest data from the source. Each task has a timeout of 3600 seconds and one retry after 30 seconds.

The concurrency and a default timeout of 83200 seconds are also set on the returned DAG object. Otherwise, it is a basic DAG configuration.
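A Bronze DAG could look roughly like the sketch below. It follows the DAG dictionary format accepted by `notebookutils.notebook.runMultiple` (`activities`, `timeoutInSeconds`, `concurrency`); mapping the 3600-second task timeout to `timeoutPerCellInSeconds`, the activity names, and the `object_file` argument name are assumptions, not taken from the Multiloader itself. The skip flag is named as in the example scripts.

```python
BRONZE_TASK_TIMEOUT = 3600  # seconds per task, as described above
DAG_TIMEOUT = 83200         # seconds for the whole DAG, as described above


def bronze_activity(object_file: str, skip_prebronze: bool = False) -> dict:
    """One runMultiple activity that calls Load_bronze for one object file."""
    return {
        "name": f"bronze_{object_file}",  # activity names must be unique in a DAG
        "path": "Load_bronze",
        "timeoutPerCellInSeconds": BRONZE_TASK_TIMEOUT,
        # Hypothetical argument names; check Load_bronze for the real ones.
        "args": {"object_file": object_file,
                 "skip_notebookprebronze": skip_prebronze},
        "retry": 1,
        "retryIntervalInSeconds": 30,
    }


def bronze_dag(object_files: list, concurrency: int,
               skip_prebronze: bool = False) -> dict:
    """Wrap the activities in a DAG with concurrency and the DAG timeout set."""
    return {
        "activities": [bronze_activity(f, skip_prebronze) for f in object_files],
        "timeoutInSeconds": DAG_TIMEOUT,
        "concurrency": concurrency,
    }
```

In a Fabric notebook the result would be passed to `notebookutils.notebook.runMultiple(dag)`; a concurrency of 1 suits sources that allow only one connection at a time.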

Silver

The Silver part works almost the same as the Bronze part. In this case, each task calls the Load_silver notebook, supplying the object YAML file as a parameter.

Silver Tasks

For Silver, the DAG tasks work similarly, except that there is no skip_notebookprebronze parameter. The default task timeout is 1800 seconds, with one retry after 30 seconds.

This step only runs if the Bronze step completes without errors that stop the notebook, or if Bronze is left out of the layers parameter.
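The Silver differences and the run condition can be sketched as follows. The activity layout uses the `runMultiple` DAG format, and the `object_file` argument name is hypothetical; `should_run_silver` expresses the rule above as a boolean check and is not taken from the Multiloader code.

```python
SILVER_TASK_TIMEOUT = 1800  # seconds per task, as described above


def silver_activity(object_file: str) -> dict:
    """One runMultiple activity that calls Load_silver (no skip flag)."""
    return {
        "name": f"silver_{object_file}",
        "path": "Load_silver",
        "timeoutPerCellInSeconds": SILVER_TASK_TIMEOUT,
        "args": {"object_file": object_file},  # hypothetical argument name
        "retry": 1,
        "retryIntervalInSeconds": 30,
    }


def should_run_silver(layers: list, bronze_succeeded: bool) -> bool:
    """Silver runs if requested and Bronze either succeeded or was not requested."""
    if "Silver" not in layers:
        return False
    return "Bronze" not in layers or bronze_succeeded
```

With `layers = ["Silver"]`, Silver runs regardless of earlier Bronze results, which is useful when reprocessing Silver on data that is already loaded.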

Gold

Gold starts the DAG_Gold notebook, providing the model.yaml configuration from the model_file parameter.

Multi model

If there are multiple models to load, you can clone the Gold box and configure the copy with the desired model file.
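Conceptually, each cloned Gold box starts DAG_Gold with its own model file. A minimal sketch of that pairing, assuming DAG_Gold takes a parameter named `model_file` and using a hypothetical second model path:

```python
def gold_runs(model_files: list) -> list:
    """One (notebook, parameters) pair per model file for DAG_Gold."""
    # The parameter name "model_file" for DAG_Gold is an assumption.
    return [("DAG_Gold", {"model_file": m}) for m in model_files]
```

For example, `gold_runs(["Files/Model/DM/model.yaml", "Files/Model/Sales/model.yaml"])` yields two runs, one per model; `Files/Model/Sales/model.yaml` is only an illustrative path.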

How to run a notebook without touching the original

Open your own personal notebook in the User folder and use the script below to call DAG_Multiloader.

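```python
import json

# DAG Multiloader
# notebookutils is built into Fabric notebooks, so it needs no import.

folder_path = "Files/Objects"             # object folder (or single object file) to load
skip_notebookprebronze = False            # True skips the pre-bronze notebooks
model_file = "Files/Model/DM/model.yaml"  # model configuration for Gold
layers = ["Bronze", "Silver", "Gold"]     # layers to load

# Every parameter is passed as a JSON string.
params = {
    "folder_path": json.dumps(folder_path),
    "skip_notebookprebronze": json.dumps(skip_notebookprebronze),
    "model_file": json.dumps(model_file),
    "layers": json.dumps(layers),
}

# Run DAG_Multiloader with a timeout of 83200 seconds.
notebookutils.notebook.run("DAG_Multiloader", 83200, params)
```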
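Because every example repeats the same encoding step, a small helper can build the parameter dictionary. This is a sketch of a hypothetical convenience function for your personal notebook; it only reproduces the JSON encoding the script above already uses, with the same defaults.

```python
import json
from typing import Dict, List, Optional


def multiloader_params(folder_path: str = "Files/Objects",
                       skip_notebookprebronze: bool = False,
                       model_file: str = "Files/Model/DM/model.yaml",
                       layers: Optional[List[str]] = None) -> Dict[str, str]:
    """Return DAG_Multiloader parameters, each encoded as a JSON string."""
    if layers is None:
        layers = ["Bronze", "Silver", "Gold"]
    return {
        "folder_path": json.dumps(folder_path),
        "skip_notebookprebronze": json.dumps(skip_notebookprebronze),
        "model_file": json.dumps(model_file),
        "layers": json.dumps(layers),
    }
```

Usage in a Fabric notebook: `notebookutils.notebook.run("DAG_Multiloader", 83200, multiloader_params(layers=["Bronze"], skip_notebookprebronze=True))`.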

Examples

I want to load all objects for the Bronze layer and skip the pre-bronze notebooks:

```python
import json

# DAG Multiloader
folder_path = "Files/Objects"
skip_notebookprebronze = True
model_file = "Files/Model/DM/model.yaml"
layers = ["Bronze"]

params = {
    "folder_path": json.dumps(folder_path),
    "skip_notebookprebronze": json.dumps(skip_notebookprebronze),
    "model_file": json.dumps(model_file),
    "layers": json.dumps(layers),
}

notebookutils.notebook.run("DAG_Multiloader", 83200, params)
```

I want to load just one object for the Bronze and Silver layers, including the pre-bronze notebooks:

```python
import json

# DAG Multiloader
folder_path = "Files/Objects/MyObject/MyTable.yaml"
skip_notebookprebronze = False
model_file = "Files/Model/DM/model.yaml"
layers = ["Bronze", "Silver"]

params = {
    "folder_path": json.dumps(folder_path),
    "skip_notebookprebronze": json.dumps(skip_notebookprebronze),
    "model_file": json.dumps(model_file),
    "layers": json.dumps(layers),
}

notebookutils.notebook.run("DAG_Multiloader", 83200, params)
```

I want to load just one object folder for the Bronze layer, including the pre-bronze notebooks:

```python
import json

# DAG Multiloader
folder_path = "Files/Objects/MyObject"
skip_notebookprebronze = False
model_file = "Files/Model/DM/model.yaml"
layers = ["Bronze"]

# Encode every parameter as JSON, as in the other examples.
params = {
    "folder_path": json.dumps(folder_path),
    "skip_notebookprebronze": json.dumps(skip_notebookprebronze),
    "model_file": json.dumps(model_file),
    "layers": json.dumps(layers),
}

notebookutils.notebook.run("DAG_Multiloader", 83200, params)
```