Blob file trigger

The blob file trigger starts processing automatically as soon as a file is created in Azure Blob Storage. This is useful when users or third parties deliver files to your data platform. In some cases it is not possible to give them direct access to Fabric itself. A separate Azure Blob Storage account is a solid solution in such cases.

In EasyFabric, you can define triggers based on events raised by Azure Event Grid.

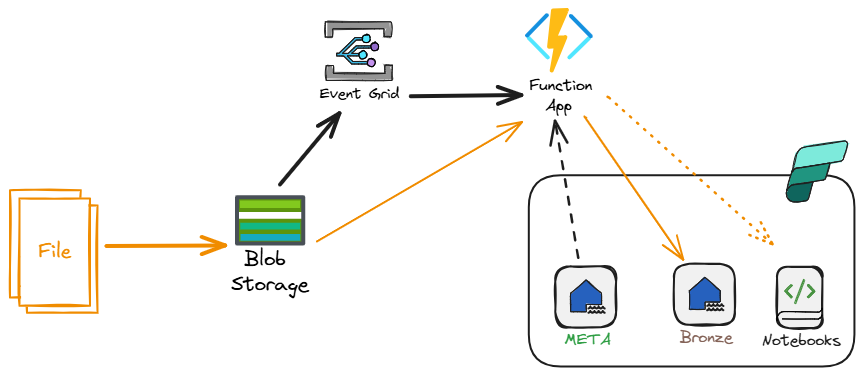

In this setup, when a file is created in Azure Blob Storage, Event Grid sends a webhook to an Azure Function. The function receives a request body with information about the event and handles it according to the trigger settings in the configuration. This solution needs the following:

- Azure Blob Storage

- Event Grid

- Azure Function

- Fabric environment

- Trigger settings in the config file

How it works

A file is created in Azure Blob Storage. This raises an event, and a webhook with information about the created file is sent to the Azure Function. The function handles the request as follows:

- Reads the URL of the created file

- Reads config.yaml from the Configuration folder in the Meta lakehouse

- Copies the file from Blob Storage to the Bronze lakehouse

- Starts the notebook configured in the trigger
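The first step, reading the URL of the created file, can be sketched in Python. This is a minimal illustration, not the EasyFabric implementation. It assumes the events arrive in the Event Grid event schema, which is a JSON array where each event carries `eventType` and `data.url`. The helper name `parse_blob_created_events` is hypothetical.

```python
from urllib.parse import unquote, urlparse


def parse_blob_created_events(events: list) -> list:
    """Extract storage account, container and blob path from Blob Created events."""
    results = []
    for event in events:
        # Skip anything that is not a Blob Created event (e.g. validation events)
        if event.get("eventType") != "Microsoft.Storage.BlobCreated":
            continue
        url = event["data"]["url"]
        parsed = urlparse(url)
        # Host looks like "<account>.blob.core.windows.net"
        storage_account = parsed.hostname.split(".")[0]
        # Path looks like "/<container>/<blob path>"; split off the container
        container, _, blob_path = parsed.path.lstrip("/").partition("/")
        results.append(
            {
                "url": url,
                "storageAccount": storage_account,
                "container": container,
                # Blob names in the URL are percent-encoded (e.g. spaces as %20)
                "blobPath": unquote(blob_path),
            }
        )
    return results


# Example payload with only the fields used above (other fields omitted)
events = [
    {
        "eventType": "Microsoft.Storage.BlobCreated",
        "subject": "/blobServices/default/containers/excel/blobs/example.xlsx",
        "data": {"url": "https://examplestor.blob.core.windows.net/excel/example.xlsx"},
    }
]
for info in parse_blob_created_events(events):
    print(info["storageAccount"], info["container"], info["blobPath"])
```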

These steps only run when the URL in the event matches a trigger defined in the configuration. An example:

    blobtriggers:
      - blobtrigger: stg
        storageAccount: examplestor
        container: excel
        filter: \.(xlsx)$
        notebook: TRG_BlobTriggerExcel
        fabricfolder: excel

Suppose an Excel file (example.xlsx) is created in the 'excel' container of the examplestor storage account. This raises an event that sends a webhook with the URL of the file to the Azure Function.

The Azure Function reads the configuration from config.yaml in the Meta lakehouse.

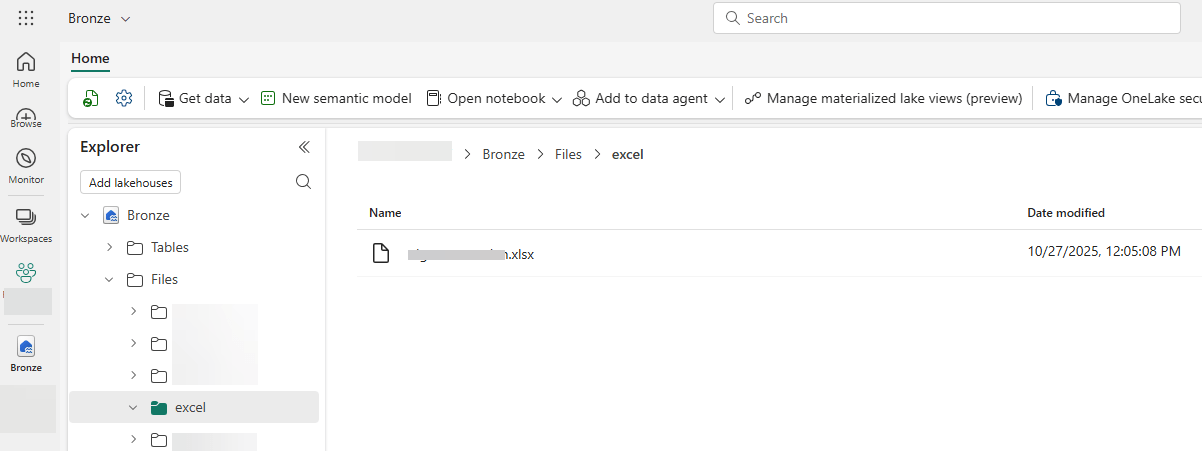

The filter checks whether the file name ends with .xlsx (the filter is optional). Since there is a match, the file is copied from Blob Storage into the Files section of the Bronze lakehouse, into the folder named 'excel' (set by fabricfolder). If this folder does not exist yet, it is created. If the file already exists, it is overwritten.
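The matching and copy-target logic described above could look roughly like the sketch below. It rests on assumptions, not on the EasyFabric source:

- The trigger is a Python dict mirroring the YAML.
- `storageAccount` and `container` must match exactly.
- The optional `filter` is applied with `re.search` on the blob path.
- The target is built as `Files/<fabricfolder>/<blobPath>`.

The function names `find_trigger` and `lakehouse_target` are hypothetical.

```python
import re
from typing import Optional


def find_trigger(triggers: list, storage_account: str, container: str, blob_path: str) -> Optional[dict]:
    """Return the first trigger that matches the blob, or None."""
    for trigger in triggers:
        # Storage account and container must match exactly (assumption)
        if trigger.get("storageAccount") != storage_account:
            continue
        if trigger.get("container") != container:
            continue
        # The filter is optional; when present it is used as a regex on the blob path
        pattern = trigger.get("filter")
        if pattern and not re.search(pattern, blob_path):
            continue
        return trigger
    return None


def lakehouse_target(trigger: dict, blob_path: str) -> str:
    """Destination of the copy under the Files section of the Bronze lakehouse."""
    return f"Files/{trigger['fabricfolder']}/{blob_path}"


# The YAML trigger from the example, as it would look after parsing
triggers = [
    {
        "blobtrigger": "stg",
        "storageAccount": "examplestor",
        "container": "excel",
        "filter": r"\.(xlsx)$",
        "notebook": "TRG_BlobTriggerExcel",
        "fabricfolder": "excel",
    }
]

match = find_trigger(triggers, "examplestor", "excel", "example.xlsx")
if match is not None:
    print(match["notebook"], lakehouse_target(match, "example.xlsx"))
```

Returning the first match keeps the behaviour predictable when several triggers overlap. Whether EasyFabric stops at the first match or runs every matching trigger is not stated here.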

Once the file has been written to Fabric, the notebook named TRG_BlobTriggerExcel is executed (the notebook is optional). The function passes the following parameters to the notebook:

    storageAccount = "examplestor"
    container = "excel"
    blobPath = "example.xlsx"
    lakehousePath = "Files/excel/example.xlsx"
    workspace = "WS_EXAMPLE"
    workspace_id = "redacted"
    bronze_lh_id = "redacted"
    target_path = "excel/example.xlsx"

You can use these values in the notebook as needed.
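This page does not describe how the function starts the notebook. One plausible approach is Fabric's public job scheduler API. It runs a notebook on demand with `POST .../workspaces/{workspaceId}/items/{itemId}/jobs/instances?jobType=RunNotebook` and accepts notebook parameters under `executionData.parameters`.

The sketch below only builds that request and is not EasyFabric's confirmed implementation. Authentication (a bearer token for the Fabric API) and the HTTP call itself are left out. The IDs are placeholders and the helper name `build_run_notebook_request` is hypothetical.

```python
import json


def build_run_notebook_request(workspace_id: str, notebook_id: str, params: dict) -> tuple:
    """Return (url, body) for an on-demand RunNotebook job in Fabric."""
    url = (
        "https://api.fabric.microsoft.com/v1/workspaces/"
        f"{workspace_id}/items/{notebook_id}/jobs/instances?jobType=RunNotebook"
    )
    body = {
        "executionData": {
            # Each notebook parameter is passed as {"value": ..., "type": ...}
            "parameters": {
                name: {"value": value, "type": "string"}
                for name, value in params.items()
            }
        }
    }
    return url, body


url, body = build_run_notebook_request(
    "<workspace-id>",
    "<notebook-id>",
    {
        "storageAccount": "examplestor",
        "container": "excel",
        "blobPath": "example.xlsx",
        "lakehousePath": "Files/excel/example.xlsx",
    },
)
print(url)
print(json.dumps(body, indent=2))
```

On the notebook side, declare variables with the same names in a cell toggled as the parameters cell. The passed values then override those defaults when the notebook runs.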

Setup

Azure Function

Create an Azure Function with the following configuration:

- Hosting plan: Flex Consumption

- Runtime stack: Python 3.12

- Instance size: 2048 MB (the default is fine)

- Other settings can be left at their defaults or configured as needed for your situation (for example, networking)

Once the Azure Function is created, go to Identity and set the system-assigned identity to On. This creates a managed identity, which appears as an Enterprise Application in Microsoft Entra ID. Look up this identity in Entra. It needs at least the following access to process the files:

- Storage Blob Data Contributor on the Azure Blob storage

- Access to the Fabric workspace(s) containing the Meta and Bronze lakehouses

- Membership of the security group that is allowed to use the Fabric admin APIs

Deploy the Azure Function from the EasyFabric project. The Function will have the following custom app settings:

- META_LAKEHOUSE_NAME

- META_CONFIG_PATH (optional, default: Configuration/Config.yaml)

- FABRIC_WORKSPACE

Their values are deployed along with the function and taken from the configuration file.
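Azure Functions exposes app settings to the code as environment variables. The sketch below shows how a Python function might read the three settings listed above, applying the documented default for META_CONFIG_PATH. The function name `load_settings` and the keys of the returned dict are hypothetical.

```python
import os
from collections.abc import Mapping
from typing import Optional

DEFAULT_CONFIG_PATH = "Configuration/Config.yaml"


def load_settings(env: Optional[Mapping] = None) -> dict:
    """Read the custom app settings, failing fast if a required one is missing."""
    # App settings are available to the function as environment variables
    env = os.environ if env is None else env
    missing = [name for name in ("META_LAKEHOUSE_NAME", "FABRIC_WORKSPACE") if not env.get(name)]
    if missing:
        raise RuntimeError("Missing app settings: " + ", ".join(missing))
    return {
        "meta_lakehouse": env["META_LAKEHOUSE_NAME"],
        "workspace": env["FABRIC_WORKSPACE"],
        # Optional setting; falls back to the documented default
        "config_path": env.get("META_CONFIG_PATH", DEFAULT_CONFIG_PATH),
    }


print(load_settings({"META_LAKEHOUSE_NAME": "Meta", "FABRIC_WORKSPACE": "WS_EXAMPLE"}))
```

Failing at startup with a clear message is usually easier to diagnose than a later error while the function tries to locate config.yaml.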

Deploy Azure Function

The Azure Function can be deployed using Azure DevOps. The deployment pipeline is located in the Yml project folder and is named function_deploy.yaml. Before deployment, ensure that the configuration file contains the correct settings. Below is an excerpt of the required configuration:

    environment: DEV
    environmentDescription: Development
    resourceGroupName: RG-Example
    functionAppName: func-example
    ..........
    lakehouses:
      - layer: Meta
        workspace: <workspace>
        lakehouse: Meta
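Before running the pipeline, a quick sanity check on the parsed configuration can catch missing values. This sketch rests on three assumptions:

- The YAML has already been loaded into a Python dict, for example with a YAML library.
- Only the keys shown in the excerpt above are checked. The full set of settings required by function_deploy.yaml may be larger.
- Values starting with `<` are treated as unfilled placeholders.

The helper name `check_deploy_config` is hypothetical.

```python
REQUIRED_KEYS = ("environment", "resourceGroupName", "functionAppName", "lakehouses")


def check_deploy_config(config: dict) -> list:
    """Return a list of problems in the parsed configuration (empty if none found)."""
    problems = [f"missing or empty key: {key}" for key in REQUIRED_KEYS if not config.get(key)]
    # Look for the lakehouse entry with layer Meta
    meta = [lh for lh in (config.get("lakehouses") or []) if lh.get("layer") == "Meta"]
    if not meta:
        problems.append("no lakehouse with layer 'Meta'")
    else:
        for key in ("workspace", "lakehouse"):
            value = str(meta[0].get(key) or "")
            # Empty values and unreplaced placeholders such as <workspace> are reported
            if not value or value.startswith("<"):
                problems.append(f"Meta lakehouse '{key}' is not filled in")
    return problems


config = {
    "environment": "DEV",
    "environmentDescription": "Development",
    "resourceGroupName": "RG-Example",
    "functionAppName": "func-example",
    "lakehouses": [{"layer": "Meta", "workspace": "<workspace>", "lakehouse": "Meta"}],
}
print(check_deploy_config(config))
```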

Event on storage

Now that the function is deployed, an event subscription has to be added to the storage account.

In the Azure portal, open the storage account and select Events in the menu:

- Click '+ Event Subscription' at the top

- Fill in the form:

- Event types: Blob Created

- Endpoint type: Web Hook

- Click 'Configure an endpoint'

- Set the URL of the Azure Function. It will look something like: https://yourfunction.region.azurewebsites.net/api/blobevent?code=key

- Optionally, you can already filter events here (for example, with subject filters), or do the filtering in the YAML configuration of the trigger. Mix and match according to your specific needs, and test the result.
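When a Web Hook subscription is created, Event Grid first sends a `Microsoft.EventGrid.SubscriptionValidationEvent` to the endpoint. The subscription is only created if the endpoint responds with HTTP 200 and echoes the `validationCode` back as `{"validationResponse": ...}`.

This page does not describe how the EasyFabric function handles this handshake. The sketch below only illustrates it, assuming the raw request body is available as a string. The function name `validation_response` is hypothetical.

```python
import json
from typing import Optional

VALIDATION_EVENT = "Microsoft.EventGrid.SubscriptionValidationEvent"


def validation_response(body: str) -> Optional[str]:
    """Return the JSON reply for an Event Grid validation request, or None."""
    for event in json.loads(body):
        if event.get("eventType") == VALIDATION_EVENT:
            # Echo the code back so Event Grid can complete the subscription
            return json.dumps({"validationResponse": event["data"]["validationCode"]})
    # Not a validation request: the caller handles it as a normal event instead
    return None


request_body = json.dumps([{"eventType": VALIDATION_EVENT, "data": {"validationCode": "example-code"}}])
print(validation_response(request_body))
```

If creating the subscription fails at the endpoint step, this handshake is a likely place to look.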

Once the event subscription is created, upload a file to the blob storage and check that it arrives in Microsoft Fabric.

In the Bronze lakehouse on Fabric you will find this: